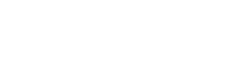

Reports have surfaced that the Israeli military has used artificial intelligence (AI) tools to identify bombing targets in Gaza. A video recorded more than a year ago has resurfaced on social media, showing an official discussing how machine learning techniques were applied to target identification.

The video, filmed at a conference in February 2023, features an official identified as "Colonel Yoav" from Unit 8200, Israel's cyber intelligence unit. In his presentation, Colonel Yoav described how machine learning was used during the 2021 offensive in Gaza, specifically to locate Hamas squad missile commanders and anti-tank missile terrorists.

He described a process in which machine learning algorithms analyzed data and flagged potential targets based on what was already known about terrorist groups. He stressed that although the AI tools helped identify targets, the final decisions were made by human intelligence officers.

Colonel Yoav highlighted the benefits of using AI tools in warfare, citing the ability to react quickly with data-driven solutions during battle. He said that feedback from intelligence officers was used to refine the algorithms, which led to the identification of more than 200 new targets.

These remarks are consistent with recent accounts from Israeli intelligence officials, who disclosed the existence of an AI-based tool called "Lavender." According to those officials, the tool helped intelligence officers identify tens of thousands of potential targets during the bombing campaign in Gaza. The Israel Defense Forces (IDF), however, have denied using AI to identify suspected terrorists.

The IDF's denial stands in contrast to the intelligence officials' accounts. While the IDF has rejected some of the claims as "baseless," it has not directly disputed the existence of tools such as Lavender.

The controversy over the Israeli military's use of AI for target identification raises ethical concerns and has prompted wider debate about the role of technology in modern warfare. Critics argue that relying on AI in military operations raises questions of accountability and human rights, particularly in densely populated conflict zones such as Gaza.

As the debate continues, the Israeli military's use of AI tools in warfare remains under scrutiny both in Israel and internationally.
